The stock market bubble inflated by AI hype since late 2022 might finally be popping. If this week’s reversals turn into a sustained downturn, analysts might look back at the actions of tech executives and Trump administration figures this week as the straw that finally broke the camel’s back.

The warnings have been coming for a while.

Ed Zitron (on the business side) and Gary Marcus (on the technical side) have been warning about the AI bubble for years now.

Even rubes such as myself noticed when 7 AI-fueled stocks exceeded 50% of NASDAQ’s market cap.

OpenAI CEO Sam Altman has been warning of an AI stock market bubble since August.

Dumbass Meta boss Mark Zuckerberg started saying “bubble” a month or so later.

JPMorgan’s Michael Cembalest noted that AI-related stocks have accounted for 75 percent of S&P 500 returns and 80 percent of earnings growth since ChatGPT launched in November 2022.

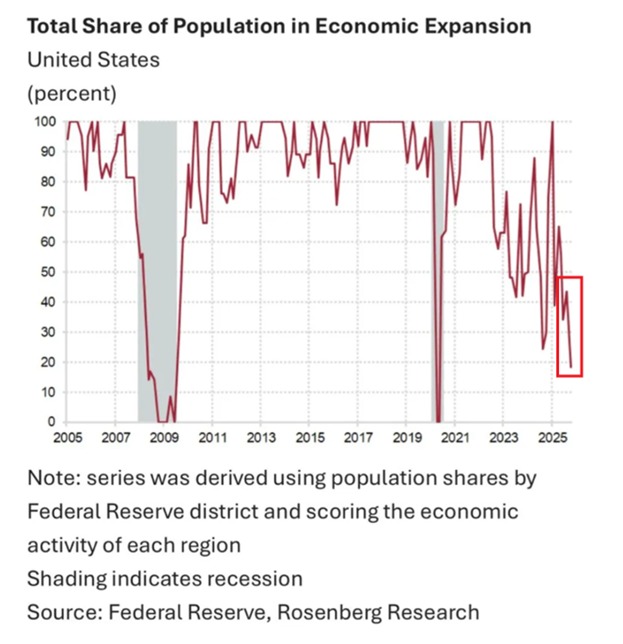

Harvard economics prof Jason Furman pointed out in late September that U.S. GDP growth in the first half of 2025 would have been just 0.1% without AI capital spending.

Yet Another Bad News Cycle for AI

Meanwhile, the litany of bad headlines for AI continued.

Here’s just a sampler, all from this week:

- New data shows companies are rehiring former employees as AI falls short of expectations (Axios)

“For every $1 a firm saves from layoffs, it spends $1.27”

- Largest study of its kind shows AI assistants misrepresent news content 45% of the time – regardless of language or territory (BBC)

“This research conclusively shows that these failings are not isolated incidents. They are systemic, cross-border, and multilingual, and we believe this endangers public trust. When people don’t know what to trust, they end up trusting nothing at all, and that can deter democratic participation.”

- AI Is Supercharging the War on Libraries, Education, and Human Knowledge (404 Media)

“AI is a direct attack on the way we verify information: AI both creates fake sources and obscures its actual sources. That is the opposite of what librarians do, and teachers do, and scientists do, and experts do.”

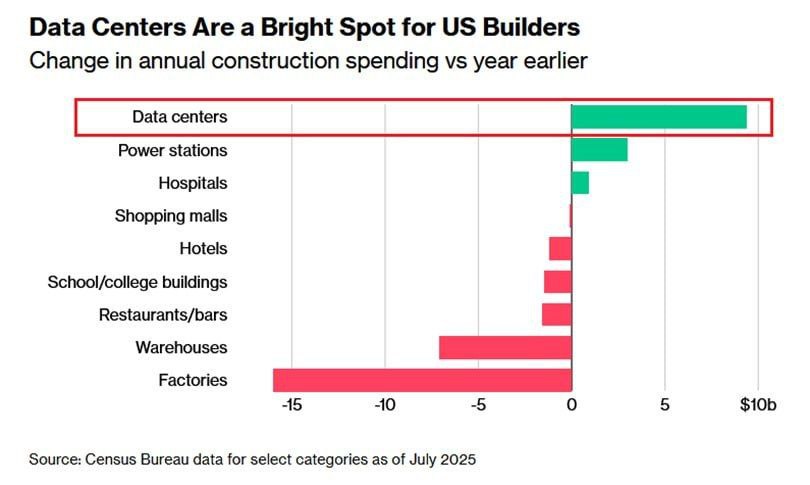

- The AI Data Center Boom Is Warping the US Economy (Wired)

“Microsoft, Alphabet, Meta, and Amazon are investing tens of billions in data centers. AI infrastructure is now a key driver of US economic growth.”

The Big Short Comes For AI

On Monday, November 3, legendary short seller Michael Burry shorted Nvidia, the chipmaker at the heart of the AI/LLM mania, and Palantir, the AI-powered government contractor.

As of Friday, he’s up about $1B.

Going for That Government Money

That’s when the AI hucksters blinked.

Well, Sam Altman had already blinked, flipping out at podcaster Brad Gerstner and walking out after a testy exchange:

Brad Gerstner: “How can a company with $13 billion in revenues make $1.4 trillion of spend commitments? You’ve heard the criticism, Sam.”

Sam Altman: If you want to sell your shares, I’ll find you a buyer. Enough.

I think there’s a lot of people who talk with a lot of breathless concern about our compute stuff or whatever that would be thrilled to buy shares. We could sell your shares or anybody else’s to some of the people who are making the most noise on Twitter about this very quickly.

We do plan for revenue to grow steeply. Revenue is growing steeply. We are taking a forward bet that it’s going to continue to grow and that not only will ChatGPT keep growing, but we will be able to become one of the important AI clouds, that our consumer device business will be a significant and important thing, that AI that can automate science will create huge value.

We carefully plan. We understand where the technology, where the capability is going to grow and how the products we can build around that and the revenue we can generate. We might screw it up. This is the bet that we’re making and we’re taking a risk along with that. A certain risk is if we don’t have the compute, we will not be able to generate the revenue or make the models at this kind of scale.

Palantir CEO Alex Karp went on CNBC’s “Squawk Box” on Tuesday and was asked about Burry’s bet:

“The two companies he’s shorting are the ones making all the money, which is super weird. The idea that chips and ontology is what you want to short is batshit crazy. He’s actually putting a short on AI. … It was us and Nvidia. I do think this behavior is egregious and I’m going to be dancing around when it’s proven wrong. It’s not even clear he’s shorting us. It’s probably just, ‘How do I get my position out and not look like a fool?’”

On Wednesday, OpenAI CEO Sam Altman went on the Conversations with Tyler podcast and openly called for a government backstop:

“When something gets sufficiently huge … the federal government is kind of the insurer of last resort, as we’ve seen in various financial crises … given the magnitude of what I expect AI’s economic impact to look like, I do think the government ends up as the insurer of last resort.”

That same day, OpenAI CFO Sarah Friar echoed the message at a Wall Street Journal technology conference.

The Journal led its story with “OpenAI Chief Financial Officer Sarah Friar said that … the company hopes the federal government might backstop the financing of future data-center deals.”

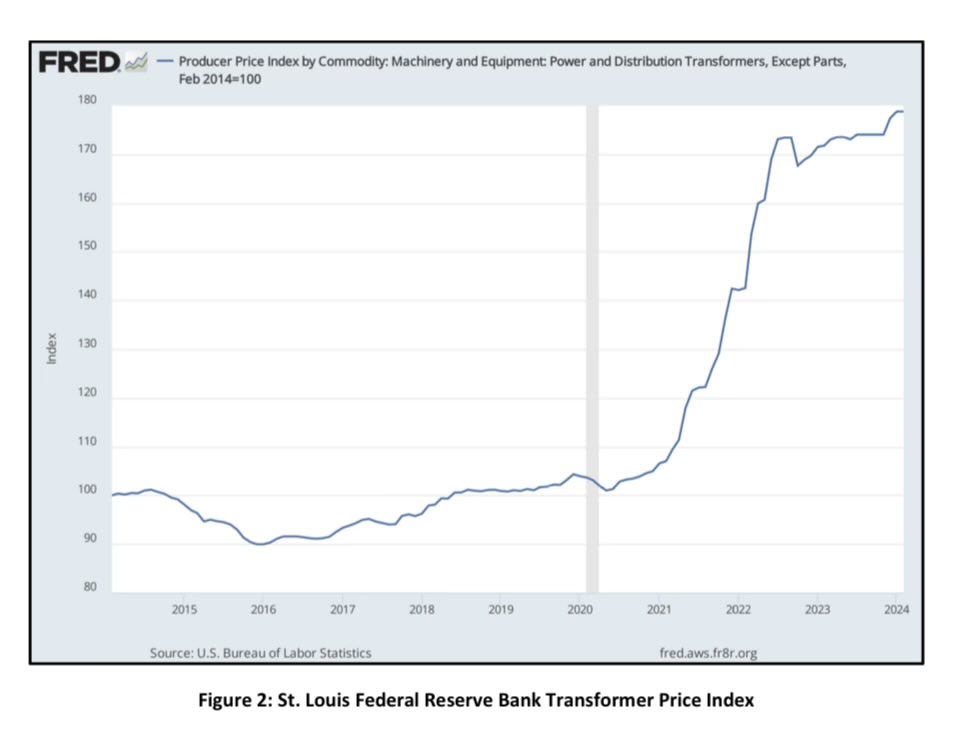

As OpenAI ramps up its spending on data center capacity to unheard of levels, the company is hoping the federal government will support its efforts by helping to guarantee the financing for chips behind its deals, Friar said. The depreciation rates of AI chips remain uncertain, making it more expensive for companies to raise the debt needed to buy them.

“This is where we’re looking for an ecosystem of banks, private equity, maybe even governmental, the ways governments can come to bear,” she said. Any such guarantee “can really drop the cost of the financing but also increase the loan-to-value, so the amount of debt you can take on top of an equity portion.”

Friar said OpenAI could reach profitability on “very healthy” gross margins in its enterprise and consumer businesses quickly if it weren’t seeking to invest so aggressively.

“I’m not overly focused on a break-even moment today,” she said. “I know if I had to get to break-even, I have a healthy enough margin structure that I could do that by pulling back on investment.”

OpenAI is losing money at a faster pace than almost any other startup in Silicon Valley history thanks to the upside-down economics of building and selling generative AI. The company expects to spend roughly $600 billion on computing power from Oracle, Microsoft, and Amazon in the next few years, meaning that it will have to grow sales exponentially in order to make the payments. Friar said that the ChatGPT maker is on pace to generate $13 billion in revenue this year.

Friar immediately realized she’d screwed up and went to LinkedIn to course-correct:

Unfortunately for Friar, she couldn’t take it back, nor did she address the other dumb things she said at the WSJ confab, per Bloomberg:

“I don’t think there’s enough exuberance about AI, when I think about the actual practical implications and what it can do for individuals. We should keep running at it.”

Regarding charts arguing that many of the AI industry’s recently announced deals are just a circular money-go-round, Friar said:

“We’re all just building out full infrastructure today that allows more compute to come into the world. I don’t view it as circular at all. A huge body of work in the last year has been to diversify that supply chain.”

On Thursday, Nvidia CEO Jensen Huang flagrantly linked the fortunes of American AI companies to American national security, telling the Financial Times that “China is going to win the AI race.”

The Nvidia chief said that the west, including the US and UK, was being held back by “cynicism”. “We need more optimism,” Huang said on Wednesday on the sidelines of the Financial Times’ Future of AI Summit.

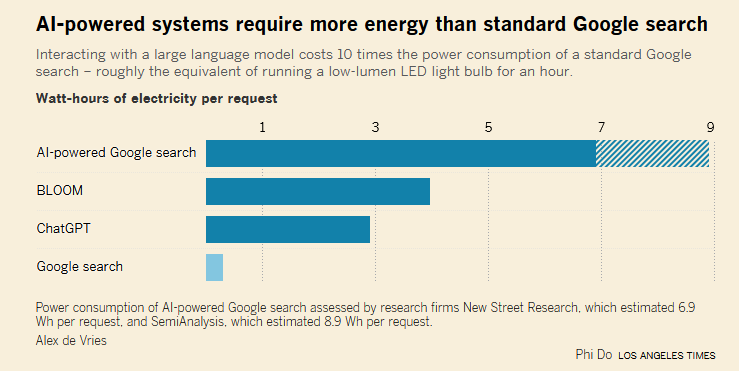

Huang singled out new rules on AI by US states that could result in “50 new regulations”. He contrasted that approach with Chinese energy subsidies that made it more affordable for local tech companies to run Chinese alternatives to Nvidia’s AI chips. “Power is free,” he said.

Gary Marcus was on it fast, pointing out that he’d been warning since January that the AI bros would go for government funding:

Former BlackRock ace Edward Dowd quickly called out the scam as well.

Dowd also warned that:

A cluster of 3 Hindenburg Omens and Altman & Jenson signaling the end is near on the AI bubble by asking for taxpayer assistance does not bode well for the short term on $SPX.

Should Trump green light government assistance and we get a pump it will likely be faded as it will not be nearly enough. Congress has true purse strings.

The stink of desperation is in the air to keep the headline indices afloat with 7 AI stocks. Ends badly at some point.

Sam Altman went into backtracking mode too.

I’d quote the whole thing, but it’s mostly bullshit and Altman is a known liar (just check out this 62-page deposition from OpenAI co-founder Ilya Sutskever, which references Altman’s “consistent pattern of lying”).

Altman’s claims were complicated when this October 27 letter from OpenAI’s Chief Global Affairs Officer to Michael Kratsios, Director of the U.S. government’s Office of Science and Technology Policy, emerged. The letter says (via Simp for Satoshi):

The Administration has already taken critical steps to strengthen American manufacturing by extending the Advanced Manufacturing Investment Credit (AMIC) for semiconductor fabrication. OSTP should now double down on this approach and work with Congress to further extend eligibility to the semiconductor manufacturing supply chain; grid components like transformers and specialized steel for their production; AI server production; and AI data centers. Broadening coverage of the AMIC will lower the effective cost of capital, de-risk early investment, and unlock private capital to help alleviate bottlenecks and accelerate the AI build in the US.

Counter the PRC by de-risking US manufacturing expansion. To provide manufacturers with the certainty and capital they need to scale production quickly, the federal government should also deploy grants, cost-sharing agreements, loans, or loan guarantees to expand industrial base capacity and resilience.

Altman spoke to Reuters to “clarify”:

OpenAI has spoken with the U.S. government about the possibility of federal loan guarantees to spur construction of chip factories in the U.S., but has not sought U.S. government guarantees for building its data centers, CEO Sam Altman said on Thursday.

Altman said the discussions were part of broader government efforts to strengthen the domestic chip supply chain, adding that OpenAI and other companies had responded to that call but had not formally applied for any financing. He said the company believes taxpayers should not backstop private-sector data center projects or bail out firms that make poor business decisions.

…

Tech officials argue that these investments are tantamount to a national security asset for the U.S. government [Reuters supplies no source for this argument. Nat], given AI’s growing role in the U.S. economy. OpenAI has committed to spend $1.4 trillion building computational resources over the next eight years, Altman said Thursday.

Regardless of Altman’s backpedaling, the whole thing became moot after the Trump administration shut down talk of AI bailouts.

Trump Tech Czar Slams That Door Shut

David Sacks, the White House’s AI czar (and a founding member of the PayPal mafia alongside Elon Musk and Peter Thiel), was quick to shut this talk down, tweeting Thursday morning:

I have to wonder whether Sacks’ statement, which was a political must following GOP losses in Tuesday’s elections, might turn out to be a Lehman Brothers moment for AI and the larger stock market bubble.

Ed Zitron’s latest report won’t stop the bleeding:

Based on analysis of years of revenues, losses and funding, from 2023 through 1H2025, OpenAI took in $28.6bn in cash and lost $13.7bn.

It was just reported that OpenAI ended 1H 2025 with $9.6bn in cash.

OpenAI has burned $4.1bn more than we thought.

And as long as we’re risking 2008 flashbacks, never forget that in 2023 the infamous Larry Summers joined the OpenAI board. I’m shocked Larry hasn’t already saved the day.

Sarcasm aside, this may be the beginning of the end for the Interregnum of Unreality that I posited began in 2008.

Let’s lay out the big picture for LLM-style AI.